Veeam Backup & Replication 12.3 New Features and Download

Veeam is undoubtedly a leading name in the backup and recovery industry. Trusted by countless organizations and individuals, including myself, it has proven its reliability for both production workloads and home labs. With Veeam Backup & Replication 12.3, the platform builds on its renowned features, offering administrators even more tools and enhanced compatibility with the latest technologies. Let’s dive into the new features and capabilities of Veeam Backup & Replication 12.3!

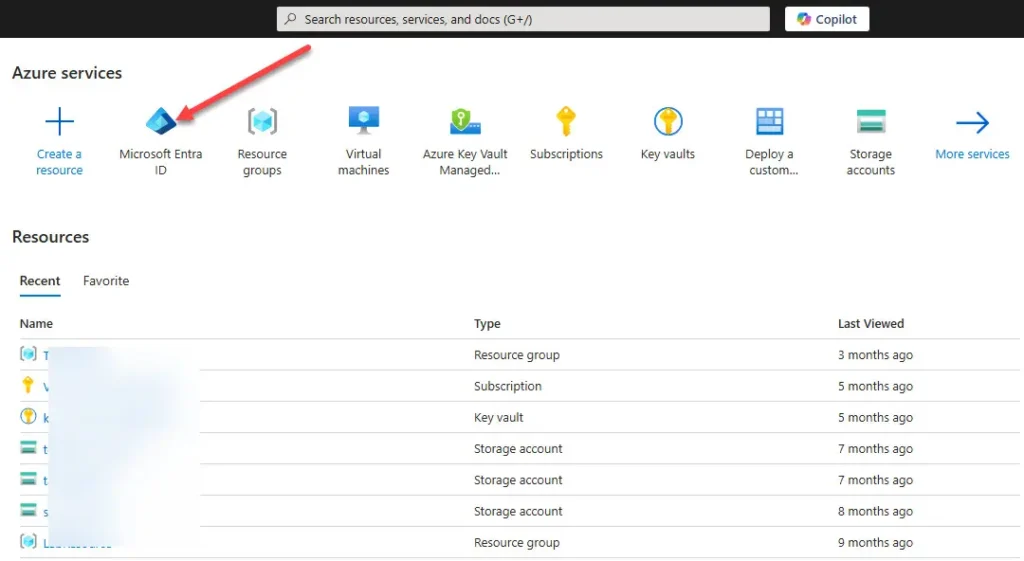

Microsoft Entra ID backup

Microsoft 365 and other connected services are widely embraced by enterprises today. Microsoft’s centralized cloud identity solution, formerly known as Azure Active Directory (AAD), has been rebranded as Microsoft Entra ID.

Since Entra ID is a core component of an organization’s identity in the Microsoft cloud, Veeam now offers robust backup and restore capabilities for Microsoft Entra ID. This ensures businesses can safeguard their Identity and Access Management (IAM) data within hybrid architectures.

The solution includes change detection, enabling administrators to identify and reverse unauthorized modifications. This capability is invaluable for protecting critical configurations against human errors or cyberattacks from malicious actors.

Automated backups simplify protecting the environment and help organizations meet their compliance requirements. Role-based access keeps data recovery aligned with existing IAM permissions, which helps prevent accidental overwriting of data.

New technology compatibility

With this release, Veeam stays aligned with Microsoft’s latest operating systems. Veeam Backup & Replication now fully supports Windows Server 2025 and Windows 11 24H2, ensuring complete compatibility with Hyper-V on these platforms.

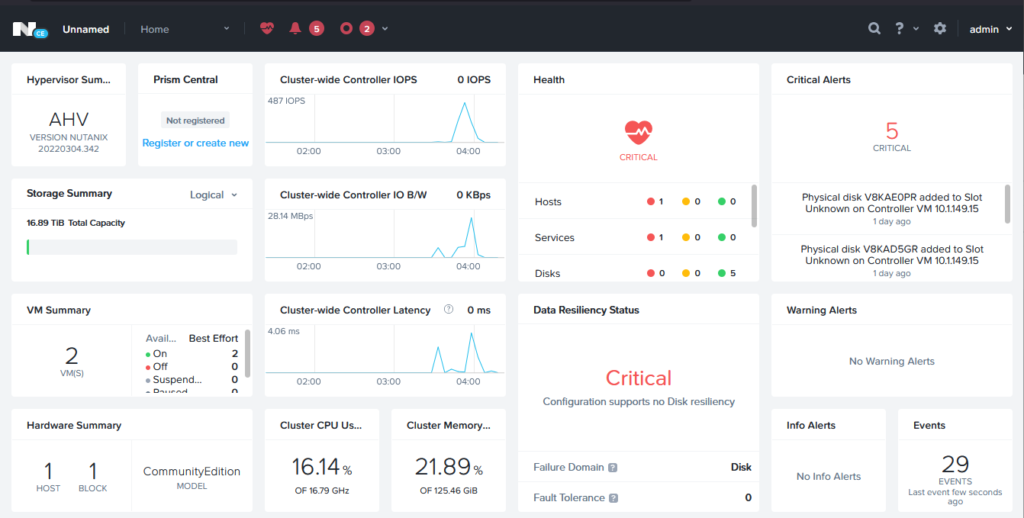

Nutanix AHV improvements

In addition to supporting the new Microsoft platforms, Veeam has expanded its coverage of Nutanix AHV. There is now experimental support for application-aware processing and inline malware detection in Nutanix AHV environments.

This gives admins visibility into possible infections of guest files on Nutanix virtual machines and helps them stop ransomware threats proactively.

MongoDB 8, PostgreSQL 17, and Oracle RMAN

Veeam Backup & Replication 12.3 introduces exciting new features for MongoDB 8, PostgreSQL 17, and Oracle RMAN. These enhancements deliver improved application consistency, accelerated data recovery, and seamless integration with database platforms.

Better security

A standout addition to Veeam Backup & Replication is the Indicators of Compromise (IoC) Detection feature. Leveraging technologies like file system indexing, it scans for potential threats such as hacker tools or abnormal file activity. This enables proactive threat detection and significantly reduces the Mean Time to Detect (MTTD).

The Veeam Threat Hunter tool introduces advanced features beyond traditional YARA rule scans and signature-based antivirus detection. Leveraging machine learning and heuristic analysis, it identifies sophisticated threats like polymorphic malware, significantly enhancing Veeam’s security capabilities during data recovery in potentially compromised scenarios.

The new Backup Encryption Strength Analyzer validates the complexity of encryption passwords, ensuring adherence to best practices for compliance and improving overall security.
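To give a feel for what a password complexity check can look like, here is a minimal sketch. It is not Veeam's algorithm, which the release notes do not describe. The function names, the 12-character minimum, and the 70-bit threshold are all assumptions chosen for illustration, and the entropy figure is only a rough upper bound based on the character classes in use.

```python
import math
import string


def estimate_entropy_bits(password: str) -> float:
    """Rough upper bound on entropy: length * log2(size of the character pool used).

    Illustrative only; real analyzers also penalize dictionary words and patterns.
    """
    pool = 0
    if any(c in string.ascii_lowercase for c in password):
        pool += 26
    if any(c in string.ascii_uppercase for c in password):
        pool += 26
    if any(c in string.digits for c in password):
        pool += 10
    if any(c in string.punctuation for c in password):
        pool += len(string.punctuation)
    if pool == 0:
        return 0.0
    return len(password) * math.log2(pool)


def is_strong(password: str, min_length: int = 12, min_bits: float = 70.0) -> bool:
    """Hypothetical policy: both a minimum length and a minimum entropy estimate."""
    return len(password) >= min_length and estimate_entropy_bits(password) >= min_bits


if __name__ == "__main__":
    for candidate in ("password", "aaaaaaaaaaaa", "Tr0ub4dor&3-backup!"):
        bits = estimate_entropy_bits(candidate)
        print(f"{candidate!r}: ~{bits:.0f} bits, strong={is_strong(candidate)}")
```

A check like this only catches short or single-class passwords. It says nothing about reuse or leaked credentials, so treat it as a first filter rather than a full policy.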

Enhanced NAS backup capabilities include improved retry logic and optimized caching, delivering better performance, which is particularly advantageous for deduplicating storage systems.

Veeam Data Cloud Vault

The Veeam Data Cloud Vault has been enhanced to deliver a more streamlined and secure solution. Businesses can now store immutable and encrypted backups in the cloud, with a durability rating of up to 12 nines (99.9999999999%).
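To put a durability rating in perspective, the short calculation below converts a number of nines into an expected number of lost objects per year. It assumes the rating is an annual, per-object figure and that losses are independent, which is how cloud providers usually quote durability but is not confirmed for this service. The function name is made up for this example.

```python
def expected_annual_losses(object_count: int, nines: int) -> float:
    """Expected objects lost per year, assuming an annual per-object
    loss probability of 10**-nines and independent losses (an assumption)."""
    loss_probability = 10.0 ** -nines
    return object_count * loss_probability


if __name__ == "__main__":
    stored_objects = 1_000_000  # hypothetical number of backup objects
    for nines in (9, 11, 12):
        losses = expected_annual_losses(stored_objects, nines)
        print(f"{nines} nines: ~{losses:.0e} objects lost per year")
```

Under these assumptions, 12 nines means that out of a trillion stored objects you would expect to lose roughly one per year.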

Additionally, Veeam has introduced two new pricing plans, allowing businesses to align their cloud storage costs with their recovery requirements. Vault management is now seamlessly integrated into the Veeam Data Platform, simplifying onboarding and monitoring for offsite backups.

Advanced Continuous Data Protection (CDP)

Continuous Data Protection (CDP) has become an essential feature for businesses where data integrity, uptime, and zero data loss are paramount. With Veeam Backup & Replication 12.3, CDP capabilities have been enhanced, including short-term retention options that allow selecting retention periods from one to seven days. This ensures robust data coverage during extended weekends or holidays.

Policy cloning has also been introduced, enabling the creation of consistent CDP policies more efficiently. This feature saves time, helps businesses meet their SLAs outlined in disaster recovery plans, and allows IT administrators to protect critical workloads while adapting to changes without compromising recovery outcomes.

Improved backup times

One of the standout improvements in Veeam Backup & Replication 12.3 is significantly faster performance for both recovery and backup. Instant VM Recovery has been optimized for large-scale recovery scenarios, allowing you to restore up to 200 VMs at speeds up to four times faster than in previous versions. This matters most during major disasters or widespread data loss events.

Additionally, Veeam Backup & Replication now integrates with NetApp SnapDiff, enabling efficient backups of unstructured data. By directly tracking file changes, this integration ensures faster and more streamlined incremental backups.
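The idea behind snapshot-difference tracking can be sketched in a few lines. This is not the NetApp SnapDiff API, which computes the differences on the storage system itself. It is a simplified illustration that compares two file listings, and the `FileMeta` type and `diff_snapshots` function are hypothetical names introduced for the example.

```python
from typing import Dict, List, NamedTuple, Tuple


class FileMeta(NamedTuple):
    size: int
    mtime: float


# A snapshot is modeled here as a mapping from file path to its metadata.
Snapshot = Dict[str, FileMeta]


def diff_snapshots(old: Snapshot, new: Snapshot) -> Tuple[List[str], List[str], List[str]]:
    """Return (created, modified, deleted) paths between two snapshots."""
    created = sorted(p for p in new if p not in old)
    deleted = sorted(p for p in old if p not in new)
    modified = sorted(p for p in new if p in old and new[p] != old[p])
    return created, modified, deleted


if __name__ == "__main__":
    previous = {
        "/share/report.docx": FileMeta(1200, 1700000000.0),
        "/share/old.log": FileMeta(300, 1700000100.0),
    }
    current = {
        "/share/report.docx": FileMeta(1500, 1700050000.0),  # changed since last run
        "/share/new.xlsx": FileMeta(800, 1700050100.0),      # newly created
    }
    created, modified, deleted = diff_snapshots(previous, current)
    print("created:", created)
    print("modified:", modified)
    print("deleted:", deleted)
```

An incremental backup only needs to read the created and modified paths. That is why getting the change list from the storage system, instead of walking the whole file tree, can shorten backup windows.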

Automation and integration

Veeam Backup & Replication 12.3 includes PowerShell and REST API enhancements that give admins better tools to manage and automate backups and recovery.

Admins can also use the new Data Integration API. It allows them to access backup data from any server without installing additional software or applications.

Wrapping up

Veeam Backup & Replication 12.3 introduces a host of valuable new features that organizations will undoubtedly appreciate, making the upgrade well worth the effort. Veeam is clearly prioritizing security, which has become a critical focus for all data protection vendors in today’s landscape. Backup providers are increasingly evolving into security companies out of necessity.

The new support for backing up Microsoft Entra ID is a significant enhancement, since Entra ID is the backbone of identity and access management for organizations using Microsoft cloud solutions. Support for Windows Server 2025 and the latest Nutanix features is also noteworthy, particularly as many businesses explore alternatives to VMware vSphere. These updates make Veeam 12.3 a compelling choice for modern data protection needs.

Relevant links

Below are the relevant links for Veeam Backup & Replication 12.3:

Download: Veeam Backup & Replication