Shutdown Proxmox cluster with Ceph storage

To shut down a Proxmox cluster without data loss or corruption, especially when Ceph storage is in use, you must follow a specific procedure.

When working with a high-availability infrastructure, performing maintenance tasks can be a delicate process. Shutting down a cluster, even for a planned event like a power outage or hardware upgrade, requires careful execution to prevent data corruption and unexpected downtime.

This guide will walk you through the correct procedure for gracefully shutting down a Proxmox cluster with a Ceph storage backend, ensuring that all services and data remain intact.

Shutdown Proxmox cluster with Ceph storage

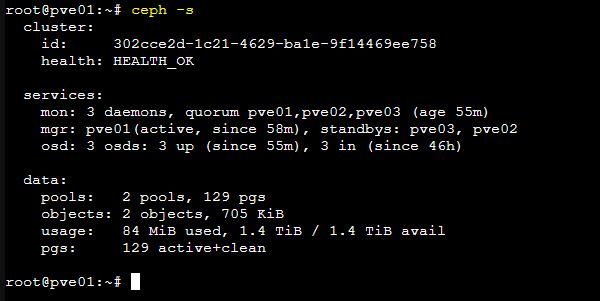

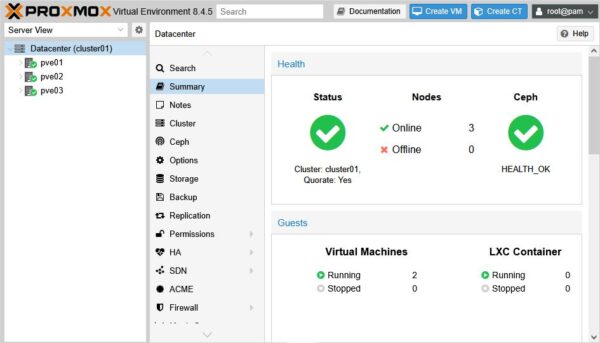

Before proceeding with the actual shutdown, ensure the Ceph cluster is in a healthy state.

# ceph -s

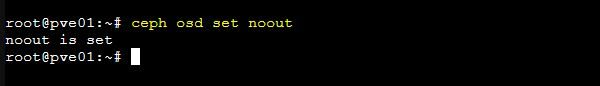
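If the shutdown is scripted, the health check can act as a gate before anything else runs. The following is a minimal sketch, assuming a POSIX shell on a node that can run Ceph admin commands. The function name `ceph_health_ok` is hypothetical; `ceph health` is the one-line form of the status shown by `ceph -s`.

```shell
# Hypothetical helper: succeed only if Ceph reports HEALTH_OK.
ceph_health_ok() {
    # `ceph health` prints one line starting with HEALTH_OK, HEALTH_WARN or HEALTH_ERR.
    status=$(ceph health 2>/dev/null | awk 'NR == 1 {print $1}')
    [ "$status" = "HEALTH_OK" ]
}

# Example gate (commented out): abort when the cluster is not healthy.
# ceph_health_ok || { echo "Ceph is not healthy, aborting" >&2; exit 1; }
```

Treating anything other than HEALTH_OK as a stop condition is a conservative choice; a cluster in HEALTH_WARN may still be safe to shut down, but that call is better made by a person reading the full `ceph -s` output.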

To prevent Ceph from automatically marking an OSD (Object Storage Daemon) as out when it goes offline, set the noout flag. This stops the cluster from starting a data rebalance while the nodes are down.

Without the noout flag, Ceph marks an unresponsive OSD as out after a timeout (600 seconds by default in current releases, controlled by the mon_osd_down_out_interval option) and then begins re-replicating that OSD's data to other OSDs in the cluster. This wastes resources and degrades performance unnecessarily when the OSD is expected to come back online shortly.

Run the command:

# ceph osd set noout

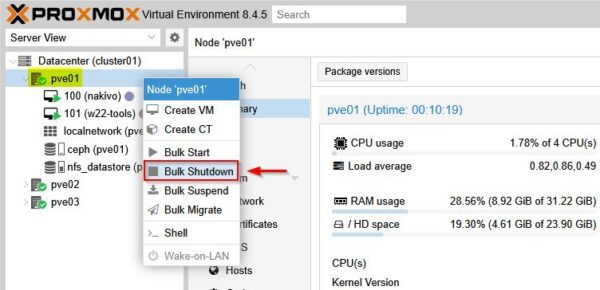
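To confirm that the flag is set before continuing, the flags line of the OSD map can be inspected. This is a minimal sketch; the function name `noout_is_set` is hypothetical, and it assumes that `ceph osd dump` prints a line of the form `flags noout,sortbitwise,...`.

```shell
# Hypothetical helper: succeed if the noout flag is present in the OSD map.
noout_is_set() {
    # Take the comma-separated list after "flags", split it into one flag
    # per line, and look for an exact "noout" entry.
    ceph osd dump 2>/dev/null \
        | awk '$1 == "flags" {print $2}' \
        | tr ',' '\n' \
        | grep -qx 'noout'
}
```

A script could then run `ceph osd set noout && noout_is_set` and stop if the second command fails.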

Shut down all VMs and containers.

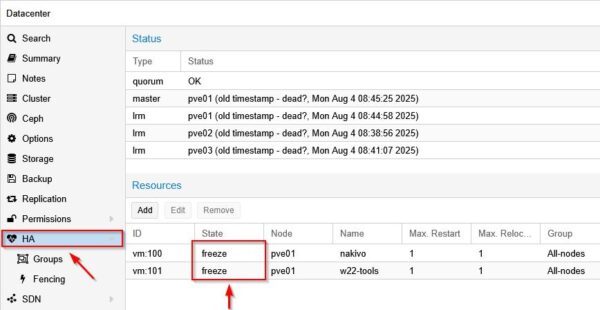
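The same step can be done from the command line on each node with `qm` and `pct`. The sketch below is an illustration only: the function names are hypothetical, and it assumes the usual column layout of `qm list` (VMID, NAME, STATUS, ...) and `pct list` (VMID, Status, Lock, Name).

```shell
# Hypothetical helpers: print the IDs of running guests on the local node.
running_vms() {
    # Skip the header row; the status is the third column of `qm list`.
    qm list 2>/dev/null | awk 'NR > 1 && $3 == "running" {print $1}'
}

running_cts() {
    # Skip the header row; the status is the second column of `pct list`.
    pct list 2>/dev/null | awk 'NR > 1 && $2 == "running" {print $1}'
}

# Ask every running guest on this node to shut down cleanly.
shutdown_local_guests() {
    for id in $(running_vms); do qm shutdown "$id"; done
    for id in $(running_cts); do pct shutdown "$id"; done
}
```

Calling `shutdown_local_guests` on every node, then checking that `running_vms` and `running_cts` print nothing, gives a simple confirmation that no guest is still running.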

If your infrastructure uses the HA capability, disable the HA manager for a planned shutdown or maintenance of a Proxmox node. This prevents the HA manager from performing unnecessary and disruptive actions, such as migrating services, when you are intentionally taking a node offline.

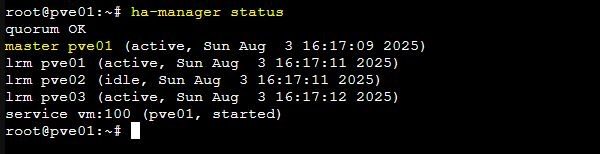

Check the status of the HA manager.

# ha-manager status

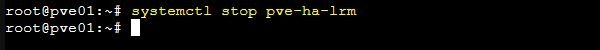

On each node, stop the pve-ha-lrm service to prevent the node from acting on its local services. This service manages the local VMs and containers and executes the commands it receives from the CRM. If you stop it on only one node, the other nodes will still be running their LRM services and the CRM will still be making decisions.

# systemctl stop pve-ha-lrm

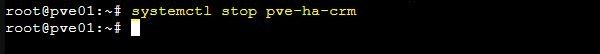

Once the LRMs are stopped on all nodes, you can stop the CRMs on each node. This service also runs on every node, but only one node at a time is the master CRM. This master is the decision-maker for the entire cluster.

If you stop the master CRM but the LRMs are still running, a new master will be elected and it will continue to manage the highly available resources, potentially overriding any manual changes you’ve made.

# systemctl stop pve-ha-crm

Stopping both services on all nodes effectively freezes the high-availability stack for the entire cluster.

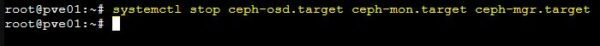
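The ordering described above (all LRMs first, then all CRMs) can be sketched as a small script run from one machine. The node names `pve1`, `pve2` and `pve3` and both function names are hypothetical, and root SSH access to every node is assumed.

```shell
# Hypothetical node names; replace with the hostnames of your cluster.
NODES="pve1 pve2 pve3"

# Stop one systemd unit on every node, stopping at the first failure.
stop_on_all_nodes() {
    unit=$1
    for node in $NODES; do
        ssh "root@$node" systemctl stop "$unit" || return 1
    done
}

# Stop the LRMs everywhere first, and only then the CRMs.
stop_ha_stack() {
    stop_on_all_nodes pve-ha-lrm && stop_on_all_nodes pve-ha-crm
}
```

Because `stop_ha_stack` finishes the LRM pass on all nodes before touching any CRM, no CRM is stopped while an LRM is still running somewhere in the cluster.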

Now all Ceph services must be stopped. Run the following command on each node:

# systemctl stop ceph-osd.target ceph-mon.target ceph-mgr.target

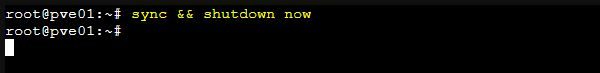

Run the sync and shutdown commands on all nodes. The sync command ensures pending disk writes are flushed. Wait for each node to shut down fully before moving to the next.

# sync && shutdown now

The three nodes have been shut down.

Power on the cluster

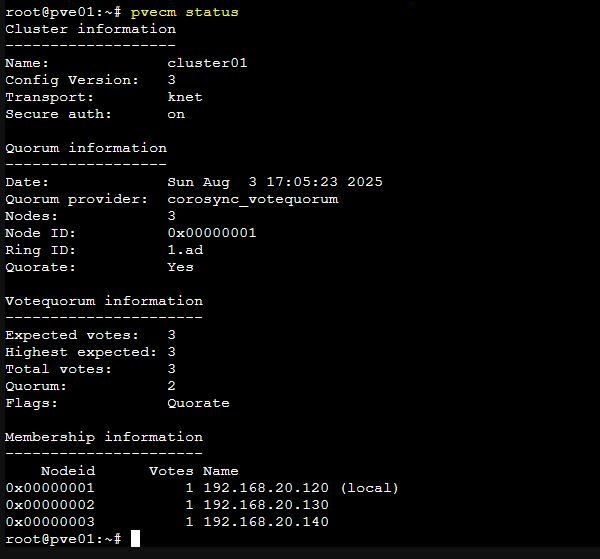

After the Proxmox cluster has been shut down, there is no specific sequence to follow when powering on the physical nodes to restore cluster functionality.

After the nodes have powered on, wait for them to form a cluster quorum.

# pvecm status

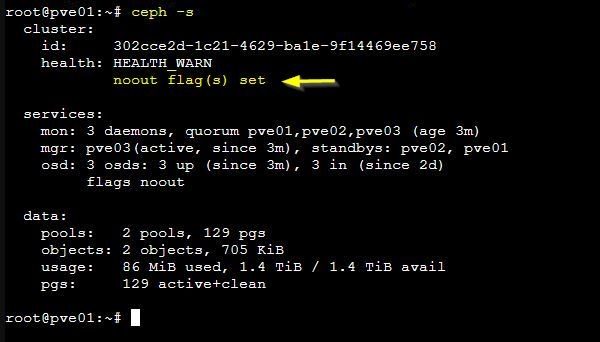
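Waiting for quorum can also be automated by polling `pvecm status`. This is a minimal sketch: the function names and the default of 30 attempts at 10-second intervals are hypothetical, and it assumes the status output contains a line of the form `Quorate:          Yes`.

```shell
# Hypothetical helper: succeed if this node sees a quorate cluster.
cluster_is_quorate() {
    pvecm status 2>/dev/null | awk '$1 == "Quorate:" {print $2}' | grep -qx 'Yes'
}

# Poll until quorum is reached or the attempts run out.
# $1: number of attempts (default 30), $2: seconds between attempts (default 10).
wait_for_quorum() {
    attempts=${1:-30}
    delay=${2:-10}
    while [ "$attempts" -gt 0 ]; do
        cluster_is_quorate && return 0
        attempts=$((attempts - 1))
        sleep "$delay"
    done
    return 1
}
```

For example, `wait_for_quorum 60 5 || echo "no quorum yet" >&2` polls for up to five minutes before giving up.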

Check the Ceph storage cluster health. Note the noout flag is reported as set.

# ceph -s

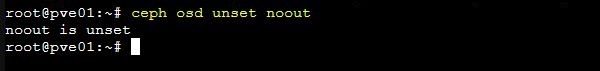

Because the noout flag was set during the shutdown procedure, you must unset it once all OSDs are back up, so that Ceph resumes its normal handling of failed OSDs.

# ceph osd unset noout

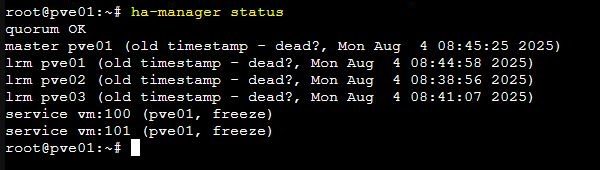
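A script can check that every OSD is up before it clears the flag. The sketch below is an assumption-heavy illustration: the function name `all_osds_up` is hypothetical, and it assumes that `ceph osd stat` prints a summary that begins like `3 osds: 3 up ...`, which may differ between Ceph releases.

```shell
# Hypothetical helper: succeed if the number of "up" OSDs equals the total.
all_osds_up() {
    ceph osd stat 2>/dev/null | awk '
        # Expected shape: "<total> osds: <up> up ..."
        $2 == "osds:" && $4 ~ /^up/ { found = 1; ok = ($1 > 0 && $1 == $3) }
        END { exit !(found && ok) }'
}

# Example (commented out): clear the flag only when every OSD is up.
# all_osds_up && ceph osd unset noout
```

If the summary line does not match the expected shape, the function fails rather than guessing, so the flag stays set until someone checks `ceph -s` by hand.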

The HA status check reports that it is not active.

# ha-manager status

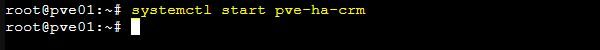

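After the Proxmox cluster is powered on, the HA services must be reactivated in the reverse order: the CRM first, then the LRM.

On each node, run this command to start the CRM service.

# systemctl start pve-ha-crm

Then, on each node, start the LRM service that was stopped during the shutdown.

# systemctl start pve-ha-lrm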

The Proxmox cluster is now up and running.

By following this procedure, you can safely shut down Proxmox with Ceph without risk of data loss or corruption.