Understanding NVIDIA vGPU Time-Slicing Policies: Best Effort vs Equal Share vs Fixed Share

GPUs are often the most expensive components in today’s server infrastructure, especially when they power AI, machine learning, or demanding graphics workloads. Because of this, it’s essential to maximise their utilisation to get the best return on your investment.

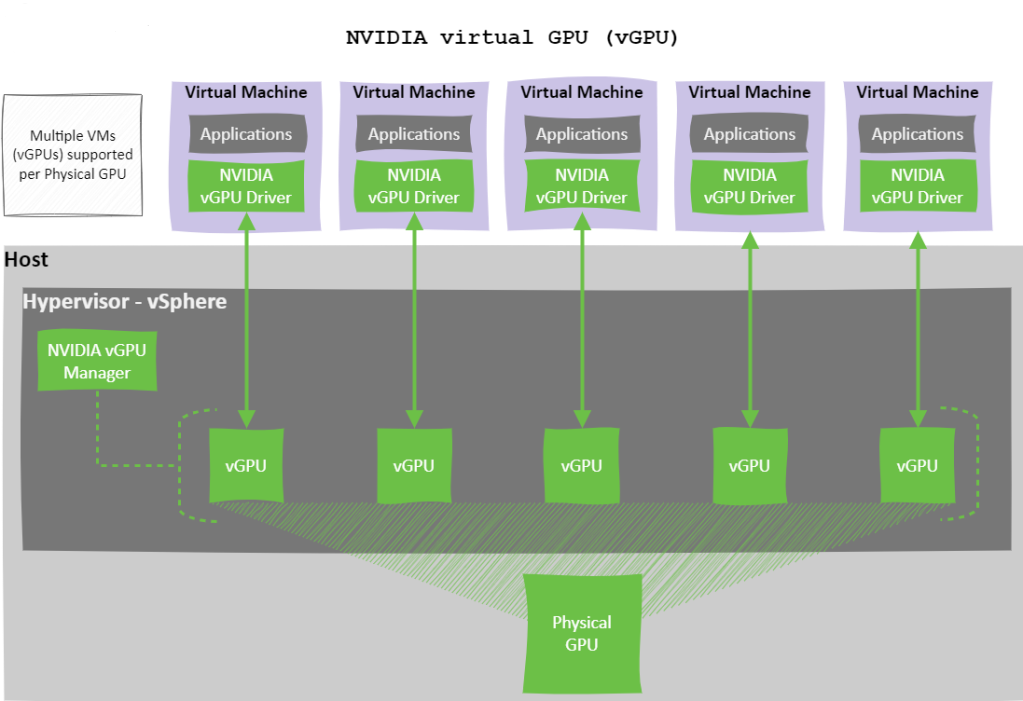

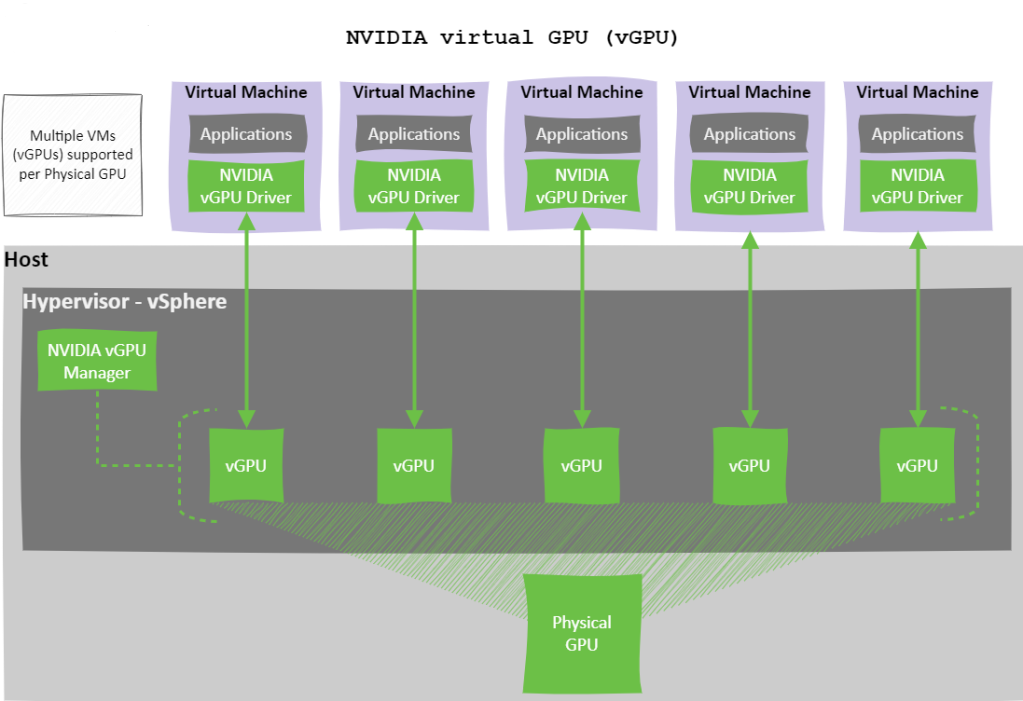

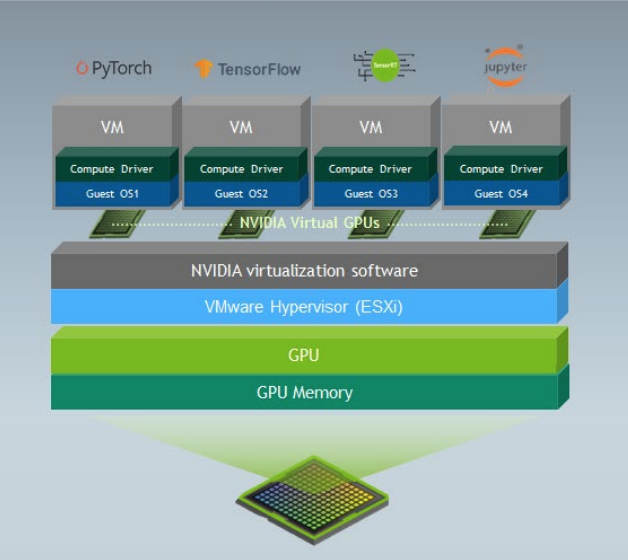

Often, a single workload doesn’t fully utilise the capacity of a physical GPU (pGPU). Instead of letting that valuable compute power go to waste, NVIDIA vGPU technology allows you to split one pGPU across multiple virtual machines (VMs), improving consolidation, boosting efficiency, and reducing costs.

However, when sharing a GPU, how you partition and schedule access between virtual GPUs (vGPUs) directly impacts performance, user experience, and workload fairness.

In this blog post, we’ll explore NVIDIA’s Time-Slicing approach, one of two native GPU partitioning methods, with a deep dive into the three supported Scheduling Policies and how the time-slice duration can be tuned to match specific workload characteristics.

NVIDIA vGPU: Time-Slicing vs MIG

NVIDIA offers two native methods for partitioning a GPU:

- Time-Slicing (software-based)

- Multi-Instance GPU (MIG) (hardware-based)

This post will focus on Time-Slicing, how it works, and the different scheduling policies available.

What is Time-Slicing?

Time-slicing is a software-based technique that allows GPU compute resources to be shared across multiple virtual GPUs (vGPUs). With time-slicing, a physical GPU can only execute one vGPU task at a time. The GPU scheduler assigns each vGPU a slice of time in which it can execute, with other vGPUs waiting in a queue for their turn.

This sequential processing means that performance can vary depending on how time slices are allocated and how busy each vGPU is.
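To make the sequential behaviour concrete, here is a minimal Python sketch of a round-robin timeline. It assumes every vGPU always has work queued and ignores context-switch cost; the function name and vGPU names are hypothetical, and this is an illustration, not NVIDIA’s scheduler implementation.

```python
from itertools import cycle


def round_robin_timeline(vgpus, slice_ms, total_ms):
    """Return (start_ms, vgpu) pairs showing which single vGPU owns the pGPU.

    Simplified model (an assumption): every vGPU always has work queued
    and the cost of switching between vGPUs is ignored.
    """
    timeline = []
    order = cycle(vgpus)  # vGPUs take turns in a fixed order
    t = 0
    while t < total_ms:
        timeline.append((t, next(order)))  # only one vGPU runs per slice
        t += slice_ms
    return timeline


for start, vgpu in round_robin_timeline(["vGPU-A", "vGPU-B", "vGPU-C"], slice_ms=2, total_ms=12):
    print(f"{start:>3} ms: {vgpu} running, others waiting")
```

With a 2 ms slice and three busy vGPUs, each vGPU runs for 2 ms and then waits 4 ms while the other two take their turns.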

Time-Slicing Scheduling Policies in NVIDIA vGPU

NVIDIA vGPU offers three Time-Slicing Scheduling Policies that determine how GPU compute resources are shared among virtual GPUs (vGPUs) on a physical GPU (pGPU). Understanding these policies is crucial for ensuring performance, fairness, and efficient utilisation in virtualised GPU environments.

The three scheduling policies are:

- Best Effort (default)

- Equal Share

- Fixed Share

Each policy is detailed below.

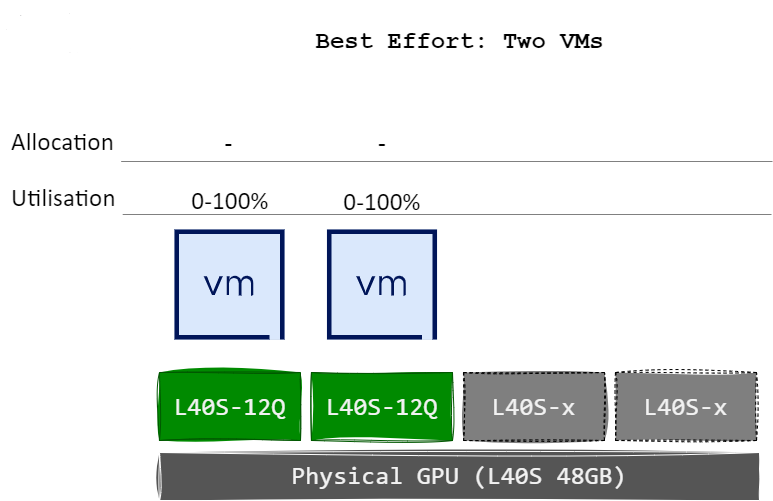

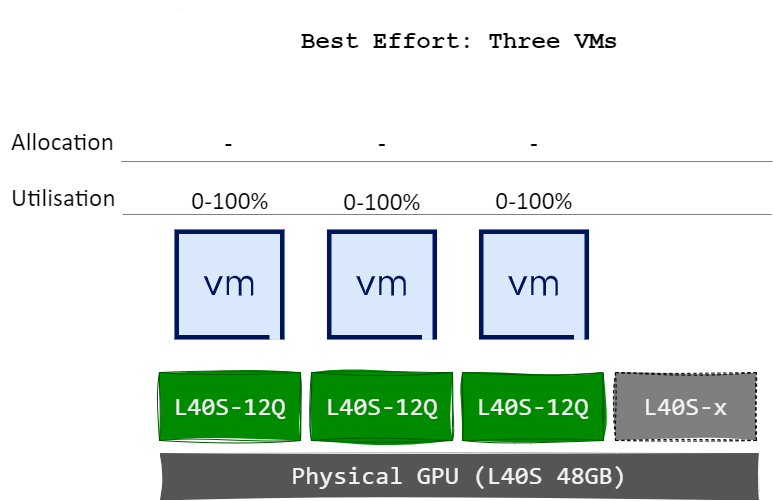

Best Effort

Best Effort is the default scheduling policy and uses a round-robin approach to distribute pGPU resources based on real-time demand. It aims to maximise GPU utilisation by allowing vGPUs to consume any available compute cycles when others are idle.

However, this policy can suffer from the “noisy neighbour” problem, where a single vGPU may dominate GPU resources, leading to performance degradation for other workloads.

Key Characteristics:

- No fixed GPU allocation per vGPU

- vGPUs can consume from 0% up to 100% of the pGPU based on availability

- Ideal for environments where maximum GPU utilisation is the priority

Illustration:

The diagram below illustrates that none of the virtual machines’ vGPUs has a fixed GPU allocation, so each vGPU can consume anywhere from 0% to 100% of the pGPU’s compute capacity, depending on real-time demand.

This behaviour remains unchanged even when additional VMs or vGPUs are assigned to the pGPU.

The diagram below illustrates a scenario in which the physical GPU is fully utilised; however, the distribution of compute resources between vGPUs and their respective virtual machines is unbalanced.
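A rough way to reason about Best Effort is a work-conserving model: when total demand fits on the pGPU, every vGPU gets what it asks for, and when the pGPU is oversubscribed, capacity follows demand. The sketch below assumes capacity is split in proportion to demand under contention. That rule is a simplification chosen to illustrate the noisy neighbour effect, not NVIDIA’s documented algorithm, and `best_effort_shares` is a hypothetical helper.

```python
def best_effort_shares(demands):
    """Simplified Best Effort model (an assumption, not NVIDIA's scheduler).

    demands: requested fraction of the pGPU per vGPU (0.0 to 1.0).
    If total demand fits, every vGPU gets what it asks for, including the
    whole GPU when the others are idle. If the pGPU is oversubscribed,
    capacity is split in proportion to demand, so a heavy vGPU takes most
    of the GPU (the "noisy neighbour" effect).
    """
    total = sum(demands.values())
    if total <= 1.0:
        return dict(demands)  # no contention: nobody is capped
    return {name: d / total for name, d in demands.items()}


shares = best_effort_shares({"vm1": 1.0, "vm2": 0.2, "vm3": 0.05})
for name, share in shares.items():
    print(f"{name}: {share:.0%}")
```

Here vm1 asks for the whole GPU and ends up with 80% of it, while the lighter vm3 is squeezed to 4%.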

Equal Share

Equal Share ensures fairness by dividing GPU compute resources equally among all active vGPUs on a pGPU. As vGPUs are added or removed, the scheduler recalculates allocations to maintain an equal distribution.

While this method avoids resource starvation and the “noisy neighbour” issue, per-vGPU performance depends on the number of active vGPUs: each vGPU receives a smaller share as more vGPUs become active.

Key Characteristics:

- Even distribution of compute time across vGPUs

- Dynamically adjusts as vGPUs are added or removed

- May lead to underutilisation if some vGPUs are idle or lightly loaded

Illustration:

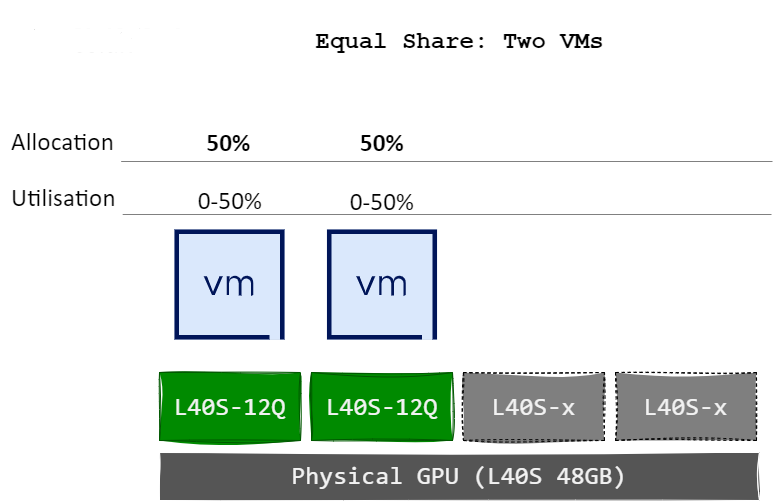

The diagram below illustrates how each virtual machine’s vGPU receives an equal allocation of the pGPU’s compute capacity. When two VMs/vGPUs are active, each is assigned 50% of the pGPU’s resources.

When a third VM/vGPU is assigned to the same pGPU, the allocation is automatically recalculated, and each vGPU receives one third (roughly 33%) of the compute capacity.

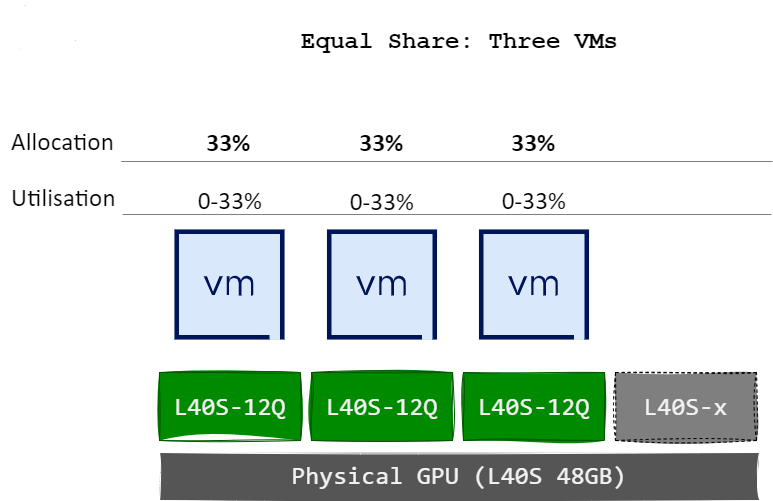

The diagram below illustrates a scenario in which compute resources are allocated equally among the active vGPUs assigned to the pGPU. If a virtual machine does not fully utilise its vGPU allocation, due to inactivity or low demand, idle time is introduced on the pGPU, resulting in underutilisation. However, this scheduling policy avoids the “noisy neighbour” effect, as all vGPUs are guaranteed an equal share of pGPU compute resources regardless of workload intensity.
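The Equal Share arithmetic, including the idle time it can leave behind, can be sketched as follows. The model assumes every vGPU in the input is active and that a vGPU can never use more than its 1/n slice; demands are fractions of the pGPU, and `equal_share` is a hypothetical helper rather than a driver API.

```python
def equal_share(demands):
    """Simplified Equal Share model (an illustration, not NVIDIA's code).

    Each active vGPU gets a 1/n slice of the pGPU, where n is the number
    of active vGPUs. A vGPU can use at most its slice; time it does not
    use is NOT handed to other vGPUs, so it shows up as pGPU idle time.
    """
    slice_ = 1.0 / len(demands)
    used = {name: min(d, slice_) for name, d in demands.items()}
    idle = 1.0 - sum(used.values())
    return slice_, used, idle


# Recalculation: 2 active vGPUs get 50% each, 3 active vGPUs get ~33% each.
print(f"{equal_share({'vm1': 1.0, 'vm2': 1.0})[0]:.0%}")
print(f"{equal_share({'vm1': 1.0, 'vm2': 1.0, 'vm3': 1.0})[0]:.1%}")

# Underutilisation: vm3 only needs 10% of the pGPU.
slice_, used, idle = equal_share({"vm1": 1.0, "vm2": 1.0, "vm3": 0.1})
print(f"idle pGPU time: {idle:.0%}")
```

With vm3 needing only 10%, roughly 23% of the pGPU sits idle even though vm1 and vm2 could use it; that is the price of avoiding the noisy neighbour.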

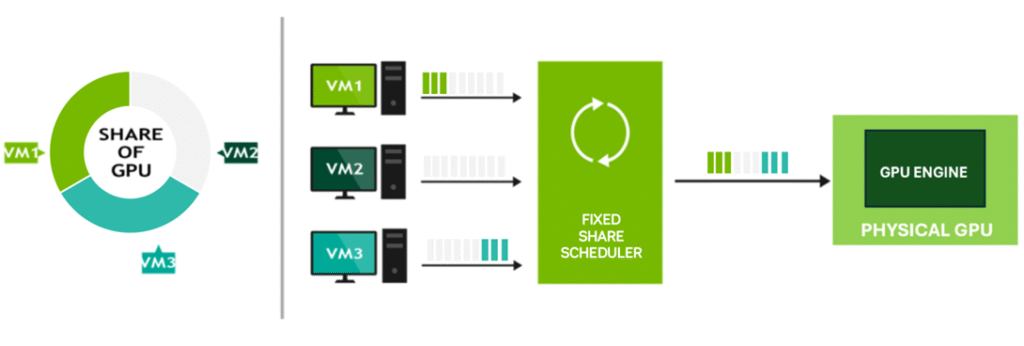

Fixed Share

Fixed Share allocates GPU compute based on the vRAM size of the first vGPU profile assigned to a pGPU. The compute time is divided into fixed slices, one for each instance of that profile that can fit on the pGPU, so each slice corresponds to the proportion of the total vRAM that the profile allocates.

This approach provides deterministic and consistent performance, making it well-suited for performance-sensitive workloads that require predictable behaviour.

Key Characteristics:

- Fixed compute allocation based on vGPU profile size

- Ensures consistent performance

- Avoids “noisy neighbour” effects

- May result in underutilisation if vGPUs are idle

Illustration:

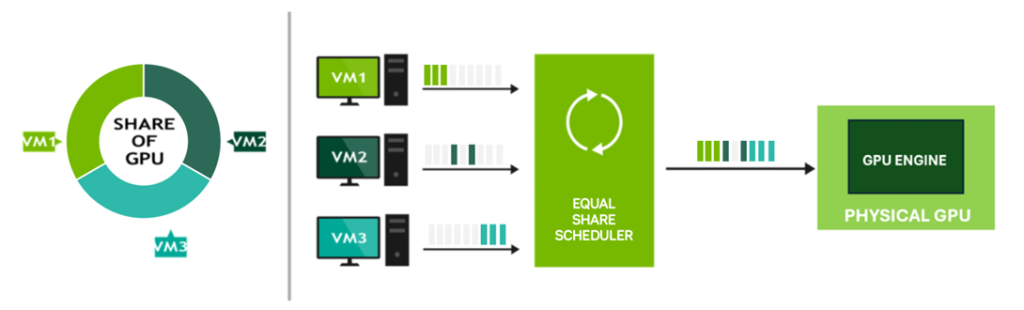

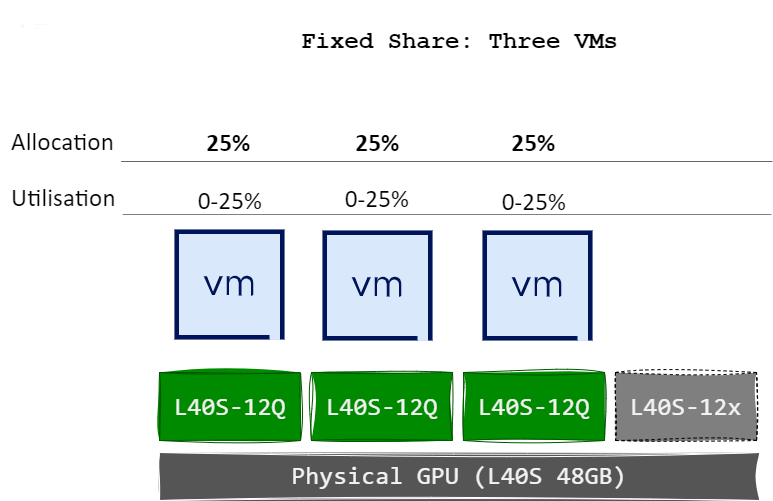

The diagram below illustrates how each virtual machine’s vGPU receives a fixed allocation of the pGPU’s compute capacity based on the defined slice size. For example, when a single VM/vGPU is assigned a 12 GB profile on an NVIDIA L40S GPU (which has 48 GB of vRAM in total), it is allocated 25% of the pGPU’s compute capacity. This is because the 48 GB GPU can accommodate a maximum of four 12 GB vGPU profiles, dividing the GPU into four equal slices of 25% each.

When two more VMs/vGPUs are assigned (they must use the same profile), each is also allocated 25% of the GPU’s compute resources, maintaining a consistent performance allocation.

The diagram below illustrates a scenario in which compute resources are allocated to each vGPU in fixed, equal slices. If a virtual machine does not fully consume its vGPU allocation, either because it is powered off or because it is not actively using GPU compute, idle time occurs on the pGPU, resulting in underutilisation. However, this scheduling policy avoids the “noisy neighbour” effect, as each vGPU is guaranteed a fixed share of the pGPU’s resources, regardless of other workloads.
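The Fixed Share slice size follows directly from the profile size and the total vRAM. This sketch reuses the L40S example (48 GB, 12 GB profile); it assumes all vGPUs on the pGPU share one profile and that unused slices stay idle, and `fixed_share` is a hypothetical helper for illustration only.

```python
def fixed_share(total_vram_gb, profile_vram_gb, vms):
    """Simplified Fixed Share model (an illustration, not NVIDIA's code).

    The pGPU is divided into as many equal slices as the profile allows
    instances (total // profile). Each VM gets one slice whether or not
    other VMs are busy; slices belonging to idle, powered-off or
    unassigned vGPUs stay idle. vms maps VM name to demanded fraction.
    """
    max_vgpus = total_vram_gb // profile_vram_gb
    if len(vms) > max_vgpus:
        raise ValueError(f"only {max_vgpus} vGPUs of {profile_vram_gb} GB fit")
    slice_ = 1.0 / max_vgpus
    used = {name: min(demand, slice_) for name, demand in vms.items()}
    idle = 1.0 - sum(used.values())
    return max_vgpus, slice_, used, idle


# L40S (48 GB) with a 12 GB profile: four slices of 25% each.
max_vgpus, slice_, used, idle = fixed_share(48, 12, {"vm1": 1.0, "vm2": 1.0, "vm3": 1.0})
print(max_vgpus, f"{slice_:.0%}", f"idle {idle:.0%}")
```

With three busy VMs on the four-slice L40S, 75% of the pGPU is allocated and the fourth slice stays idle, regardless of how much more the three VMs could use.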

Time-Slice Duration

Both the Equal Share and Fixed Share policies support configuring the time-slice duration: the length of time a vGPU is scheduled to run before the next vGPU takes over.

There are two time-slicing approaches:

1. Strict Round-Robin Enabled

In this mode, two parameters control scheduling:

- Scheduling Frequency: The number of times per second the GPU scheduler switches between vGPUs.

- Averaging Factor: Determines the moving average used to manage time-slice overshoots for each vGPU. A higher averaging factor results in a more relaxed enforcement of the scheduling frequency, while a lower value enforces stricter scheduling.
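One way to picture how Scheduling Frequency and Averaging Factor interact is sketched below. The nominal slice is simply 1000 ms divided by the frequency; the overshoot handling is a hypothetical exponential-moving-average model written to match the description above, not NVIDIA’s actual algorithm, and both function names are illustrative.

```python
def nominal_slice_ms(scheduling_frequency_hz):
    """Nominal time slice implied by a scheduling frequency (switches per second)."""
    return 1000.0 / scheduling_frequency_hz


def compensated_slices(frequency_hz, averaging_factor, actual_runs_ms):
    """Hypothetical overshoot compensation using an exponential moving average.

    After each run, the overshoot (actual - nominal) is folded into a moving
    average, and the next slice is shortened by that average. A larger
    averaging_factor makes the average move more slowly, so overshoots are
    corrected more gradually (looser enforcement).
    """
    nominal = nominal_slice_ms(frequency_hz)
    avg_overshoot = 0.0
    next_slices = []
    for actual in actual_runs_ms:
        overshoot = actual - nominal
        avg_overshoot += (overshoot - avg_overshoot) / averaging_factor
        next_slices.append(max(0.0, nominal - avg_overshoot))
    return next_slices


# 500 switches per second gives a 2 ms nominal slice; every run overshoots by 1 ms.
print(compensated_slices(500, 1, [3.0, 3.0, 3.0]))  # -> [1.0, 1.0, 1.0]
print(compensated_slices(500, 4, [3.0, 3.0, 3.0]))  # -> [1.75, 1.5625, 1.421875]
```

With a factor of 1 the next slice is cut by the full 1 ms overshoot straight away; with a factor of 4 the correction builds up over several slices, which is the more relaxed enforcement the Averaging Factor describes.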

2. Strict Round-Robin Disabled

When disabled, scheduling is controlled via the Time Slice Length, defined in milliseconds. This specifies how long each vGPU can execute before the next is scheduled.

- Minimum: 1 ms

- Maximum: 30 ms
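The 1 to 30 ms range, and its effect on how long a vGPU waits for its next turn, can be sketched as below. The worst-case wait assumes every other busy vGPU uses its full slice and ignores switching cost; the constants mirror the stated range, while the function names are hypothetical.

```python
MIN_SLICE_MS = 1   # lower bound stated for Strict Round-Robin disabled
MAX_SLICE_MS = 30  # upper bound stated for Strict Round-Robin disabled


def check_slice_ms(slice_ms):
    """Reject time-slice lengths outside the 1-30 ms range."""
    if not MIN_SLICE_MS <= slice_ms <= MAX_SLICE_MS:
        raise ValueError(f"time slice must be {MIN_SLICE_MS}-{MAX_SLICE_MS} ms, got {slice_ms}")
    return slice_ms


def worst_case_wait_ms(slice_ms, busy_vgpus):
    """Longest wait for the next turn if every other busy vGPU uses its full slice.

    Simplification: context-switch cost is ignored.
    """
    return check_slice_ms(slice_ms) * (busy_vgpus - 1)


for slice_ms in (1, 10, 30):
    print(f"{slice_ms:>2} ms slice, 4 busy vGPUs: wait up to {worst_case_wait_ms(slice_ms, 4)} ms")
```

With four busy vGPUs, a 30 ms slice means a vGPU can wait up to 90 ms between turns, versus 3 ms with a 1 ms slice, which is why shorter slices suit interactive graphics.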

NVIDIA Recommendations:

| Workload Type | Suggested Time Slice |
|---|---|
| Latency-sensitive (e.g. graphics) | Shorter time slice for improved responsiveness |
| Throughput-optimised (e.g. AI, ML) | Longer time slice to reduce context-switching overhead |
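The other side of the trade-off is switching overhead. The sketch below treats each switch as a fixed cost added to every slice; the 0.1 ms value is a made-up placeholder, not a measured or published figure, so only the trend is meaningful, and `switch_overhead` is a hypothetical helper.

```python
def switch_overhead(slice_ms, switch_cost_ms):
    """Fraction of pGPU time spent switching contexts rather than running work.

    switch_cost_ms is a hypothetical placeholder: the real cost depends on
    the GPU, driver and workload and is not given here.
    """
    return switch_cost_ms / (slice_ms + switch_cost_ms)


ASSUMED_SWITCH_COST_MS = 0.1  # illustrative value only, not a real measurement
for slice_ms in (1, 2, 10, 30):
    print(f"{slice_ms:>2} ms slice: {switch_overhead(slice_ms, ASSUMED_SWITCH_COST_MS):.1%} overhead")
```

Whatever the real switch cost is, the overhead fraction falls as the slice grows, which is why longer slices favour throughput-oriented AI/ML work.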

Conclusion

Effective GPU resource management is essential in virtualised environments, especially when aiming to maximise performance and return on investment. NVIDIA’s Time-Slicing Scheduling Policies (Best Effort, Equal Share, and Fixed Share) each offer distinct advantages depending on your workload requirements and infrastructure goals.

- Use Best Effort for flexibility and maximum utilisation.

- Choose Equal Share for fairness with mixed workloads.

- Opt for Fixed Share when predictability and consistency are essential.

- If your workload is AI/CUDA-based, use either Equal Share or Fixed Share.

Understanding how these policies operate, and how to fine-tune time-slice durations, empowers you to optimise GPU usage, minimise contention, and deliver consistent performance across all virtualised workloads.

As GPU demand continues to grow, selecting the right scheduling policy is key to maintaining both efficiency and user experience in your environment.