What is vSphere Supervisor?

vSphere Supervisor allows you to implement a consistent declarative API within vSphere, supporting the development of modern cloud experiences through VCF Automation. Once enabled on vSphere clusters, it lets you operate workloads declaratively, including provisioning and managing upstream Kubernetes clusters, virtual machines, and vSphere Pods. Workload execution is organized within defined resource boundaries known as vSphere Namespaces.

The Challenges of Today’s Application Stack

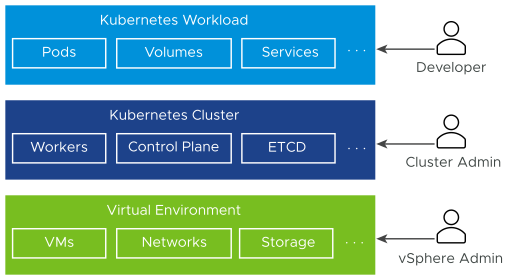

Modern distributed systems are typically composed of numerous microservices operating across many Kubernetes pods and virtual machines. In environments that do not utilize vSphere Supervisor, the architecture generally includes a virtualized infrastructure layer, with Kubernetes clusters deployed within virtual machines, and the corresponding pods running inside those VMs. Managing this kind of setup often involves three distinct roles: application developers, Kubernetes administrators, and infrastructure or cloud administrators, each responsible for their own segment of the stack.

Today’s Application Stack

Each role within the infrastructure stack operates in isolation, with limited visibility or control over the other layers:

- Application developers are responsible for deploying and managing Kubernetes-based applications and pods, but they typically lack insight into the full infrastructure stack that supports these workloads.

- DevOps engineers or Kubernetes administrators manage the Kubernetes platform itself, but they often don’t have the necessary tools to monitor or troubleshoot issues within the underlying virtual infrastructure, particularly when it comes to resource constraints.

- vSphere or cloud administrators oversee the virtualized infrastructure, yet they do not have visibility into the Kubernetes layer—specifically, how Kubernetes objects are distributed within the virtual environment or how those resources are being consumed.

Coordinating operations across the entire stack can be complex and inefficient, often requiring collaboration between all three roles. This lack of integration across the layers presents additional challenges. For instance, because the Kubernetes scheduler is unaware of the vCenter inventory, it cannot make optimal decisions about pod placement.

How Does vSphere Supervisor Help?

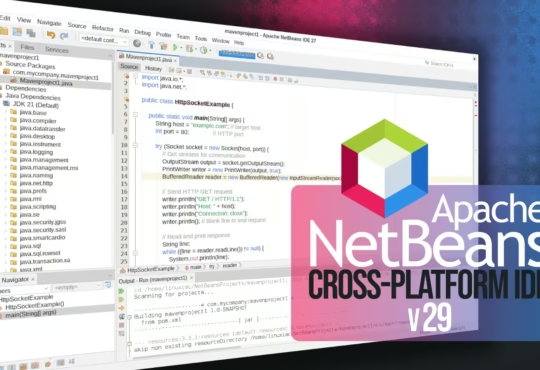

vSphere Supervisor provides a declarative API and desired state by deploying a Kubernetes control plane directly on vSphere clusters. As a vSphere administrator, you activate existing vSphere clusters for vSphere Supervisor, thus creating a Kubernetes layer within the clusters.

Integrating a Kubernetes control plane directly into vSphere clusters unlocks a range of capabilities within the vSphere environment:

- For vSphere administrators, it becomes possible to create and configure vSphere Namespaces—logical resource boundaries within the Supervisor. These namespaces can be allocated specific amounts of CPU, memory, and storage, and are then made available to DevOps teams.

- DevOps engineers can leverage these namespaces to run Kubernetes workloads using shared resource pools. They have the ability to deploy and manage multiple upstream Kubernetes clusters using the vSphere Kubernetes Service (VKS), run containerized applications directly on the Supervisor via specialized virtual machines known as vSphere Pods, and also deploy traditional virtual machines as needed.

- vSphere administrators can monitor and manage all vSphere Pods, virtual machines, and Kubernetes clusters provisioned through VKS using the vSphere Client. This provides comprehensive visibility into resource usage, object placement, and operations across various namespaces.

Running Kubernetes natively on vSphere clusters enhances collaboration between infrastructure administrators and DevOps teams, as both operate on the same platform and interact with shared infrastructure components.
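The idea of a vSphere Namespace as a resource boundary with CPU, memory, and storage allocations can be illustrated with a small model. This is a conceptual sketch only, not how the Supervisor enforces limits: the `Resources` and `Namespace` classes, the units, and the `admit` method are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Resources:
    """Hypothetical resource triple: CPU in cores, memory and storage in GiB."""
    cpu: float = 0.0
    memory_gib: float = 0.0
    storage_gib: float = 0.0

    def __add__(self, other: "Resources") -> "Resources":
        return Resources(
            self.cpu + other.cpu,
            self.memory_gib + other.memory_gib,
            self.storage_gib + other.storage_gib,
        )

    def fits_within(self, limit: "Resources") -> bool:
        # Every dimension must stay at or below the corresponding limit.
        return (
            self.cpu <= limit.cpu
            and self.memory_gib <= limit.memory_gib
            and self.storage_gib <= limit.storage_gib
        )


@dataclass
class Namespace:
    """Hypothetical model of a namespace with fixed resource limits."""
    name: str
    limits: Resources
    used: Resources = field(default_factory=Resources)

    def admit(self, request: Resources) -> bool:
        """Accept the request only if total usage stays within the limits."""
        projected = self.used + request
        if not projected.fits_within(self.limits):
            return False
        self.used = projected
        return True


# An administrator allocates limits; a DevOps team then consumes them.
team_a = Namespace("team-a", Resources(cpu=8, memory_gib=32, storage_gib=200))
first = team_a.admit(Resources(cpu=4, memory_gib=16, storage_gib=100))
second = team_a.admit(Resources(cpu=4, memory_gib=16, storage_gib=100))
third = team_a.admit(Resources(cpu=1, memory_gib=1, storage_gib=1))
print(first, second, third)
```

In this model the first two requests fill the namespace exactly, so the third is rejected and the recorded usage does not change.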

What Is a Workload?

In vSphere Supervisor, workloads are applications deployed in one of the following ways:

- Applications that consist of containers running inside vSphere Pods.

- Workloads provisioned through the VM service.

- Kubernetes clusters deployed by using vSphere Kubernetes Service (VKS).

- Applications that run inside VKS clusters.

What are vSphere Zones?

vSphere Zones enhance workload availability on vSphere Supervisor by safeguarding against failures at the cluster level. As a vSphere administrator, you can define vSphere Zones within the vSphere Client and associate individual vSphere clusters with these zones.

Zones can also be used to separate the Supervisor’s control plane from the workloads it manages. For example:

- You can deploy the Supervisor across one or three designated vSphere Zones reserved specifically for its control plane. These are labeled as management zones in the vSphere Client. When spread across three zones, the Supervisor’s control plane virtual machines are distributed accordingly, providing cluster-level high availability. Deploying it in just one zone, however, limits availability to the host level.

- Separately, you can assign up to three vSphere Zones to a vSphere Namespace, which are then used exclusively for running workloads.
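The relationship between the number of management zones and the resulting availability level can be sketched as follows. This is an illustrative model, not product code: the function names are hypothetical, the count of three control plane VMs is an assumption not stated above, and round-robin is simply one way to express "distributed accordingly".

```python
def place_control_plane(zones, vm_count=3):
    """Spread control plane VMs across management zones in round-robin order.

    Assumption: the Supervisor runs `vm_count` control plane VMs (3 here).
    """
    if len(zones) not in (1, 3):
        raise ValueError("the Supervisor is deployed on one or three zones")
    placement = {zone: [] for zone in zones}
    for index in range(vm_count):
        zone = zones[index % len(zones)]
        placement[zone].append(f"control-plane-vm-{index + 1}")
    return placement


def availability_level(zones):
    """Three zones give cluster-level HA; one zone gives host-level HA."""
    return "cluster" if len(zones) == 3 else "host"


three_zone = ["zone-a", "zone-b", "zone-c"]
single_zone = ["zone-a"]

print(place_control_plane(three_zone), availability_level(three_zone))
print(place_control_plane(single_zone), availability_level(single_zone))
```

With three zones each zone holds one control plane VM, so losing a whole vSphere cluster leaves two VMs running. With one zone all VMs share a cluster, and only host failures are tolerated.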

What is vSphere Kubernetes Service or VKS Cluster?

The vSphere Kubernetes Service (vSphere KS) enables you to provision upstream Kubernetes clusters directly on vSphere Supervisor. These clusters, known as VKS clusters, represent complete Kubernetes distributions that are developed, digitally signed, and officially supported by VMware by Broadcom.

Key features of a VKS cluster include:

VKS characteristics

Streamlined Kubernetes Deployment

vSphere Kubernetes Service (vSphere KS) delivers a curated Kubernetes installation experience tailored for the vSphere environment. It comes with preconfigured, optimized defaults, minimizing the time and complexity typically required to deploy and manage an enterprise-ready Kubernetes cluster.

Deep Integration with vSphere Infrastructure

VKS clusters are tightly coupled with the vSphere Software-Defined Data Center (SDDC) stack, integrating with core services such as storage, networking, and authentication. These clusters run on top of the Supervisor, which is directly mapped to underlying vSphere clusters, creating a unified and seamless operational experience.

Enterprise-Grade and Production-Ready

VKS clusters are provisioned with production use in mind, requiring minimal setup. They support high availability, allow rolling upgrades of Kubernetes, and let you run different Kubernetes versions in isolated clusters, enabling flexible and resilient operations for enterprise workloads.

High Availability Across Zones

When VKS clusters are deployed across two or three designated vSphere Zones reserved for workloads, both control plane and worker nodes are distributed across those zones. This design protects against failures at the vSphere cluster level. For clusters deployed in a single zone, protection is provided at the ESXi host level through native vSphere HA.

End-to-End Support from VMware

Since VKS clusters are based on VMware’s Linux OS, run on vSphere infrastructure, and operate on ESXi hosts, VMware provides comprehensive support for the entire stack. Whether issues arise in the hypervisor or the Kubernetes layer, a single support channel is available.

Kubernetes-Native Management

Built on the Supervisor, which itself is a Kubernetes cluster, VKS clusters are declared and managed using Kubernetes-native tools. You define clusters using custom resources within vSphere Namespaces and deploy them through familiar tooling such as kubectl and the VCF CLI. This unified approach ensures consistency throughout your DevOps toolchain from cluster provisioning to workload deployment.
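To make the idea of declaring a cluster as a custom resource more concrete, the sketch below builds a cluster definition as a Python dictionary and prints it as JSON. It assumes the cluster is expressed with the upstream Cluster API `Cluster` kind (`cluster.x-k8s.io/v1beta1`) and its `topology` fields. The cluster class name, worker class name, Kubernetes version, and namespace are placeholders, and a real VKS cluster most likely needs additional class-specific settings that are omitted here.

```python
import json


def build_cluster_manifest(name, namespace, cluster_class, kubernetes_version,
                           worker_class, control_plane_replicas=3,
                           worker_replicas=3):
    """Return a Cluster API-style manifest as a plain dictionary.

    Assumption: field names follow the upstream Cluster API v1beta1 schema.
    All argument values are supplied by the caller; nothing is discovered.
    """
    return {
        "apiVersion": "cluster.x-k8s.io/v1beta1",
        "kind": "Cluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "topology": {
                "class": cluster_class,
                "version": kubernetes_version,
                "controlPlane": {"replicas": control_plane_replicas},
                "workers": {
                    "machineDeployments": [
                        {
                            "class": worker_class,
                            "name": "workers",
                            "replicas": worker_replicas,
                        }
                    ]
                },
            }
        },
    }


# Every value below is a placeholder chosen for illustration.
manifest = build_cluster_manifest(
    name="demo-cluster",
    namespace="team-a",
    cluster_class="example-cluster-class",
    kubernetes_version="v0.0.0-placeholder",
    worker_class="example-worker-class",
)
print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, output of this shape could be saved to a file and applied against a vSphere Namespace once the placeholders are replaced with values valid in your environment.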

What Is a vSphere Pod?

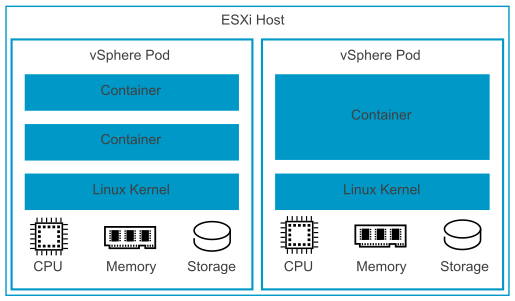

The vSphere Supervisor introduces a unique concept known as a vSphere Pod, which serves a similar purpose to a standard Kubernetes pod. A vSphere Pod is a lightweight virtual machine designed to run one or more Linux containers. It is precisely sized for the specific workload it hosts, with dedicated allocations for CPU, memory, and storage to meet the workload’s requirements. This ensures predictable performance and efficient resource utilization. vSphere Pods are available only when the Supervisor is deployed with NSX as the networking stack.

vSphere Pods

vSphere Pods are objects in vCenter and enable the following capabilities for workloads:

- Strong isolation. A vSphere Pod is isolated in the same manner as a virtual machine. Each vSphere Pod has its own unique Linux kernel that is based on the kernel used in Photon OS. Rather than many containers sharing one host kernel, as in a bare-metal configuration, the containers in a vSphere Pod run on that pod's own kernel.

- Resource management. vSphere Distributed Resource Scheduler (DRS) handles the placement of vSphere Pods on the Supervisor.

- High performance. vSphere Pods get the same level of resource isolation as VMs, eliminating noisy neighbor problems while maintaining the fast start-up time and low overhead of containers.

- Diagnostics. As a vSphere administrator, you can use all the monitoring and introspection tools that are available with vSphere on workloads.

vSphere Pods are Open Container Initiative (OCI) compatible and can run containers from any operating system as long as these containers are also OCI compatible.

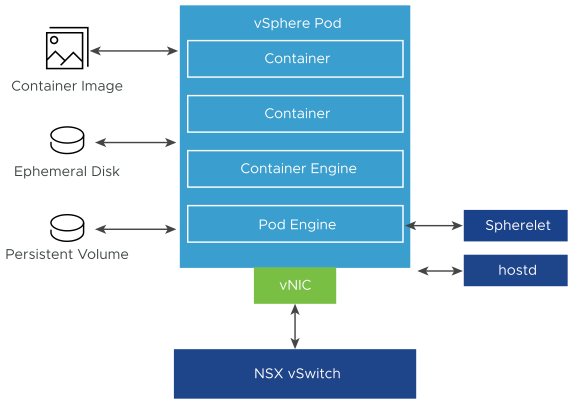

vSphere Pod Networking and Storage

vSphere Pods utilize three different types of storage depending on the data being stored: ephemeral VMDKs, persistent volume VMDKs, and container image VMDKs. As a vSphere administrator, you set storage policies at the Supervisor level to manage the placement of container image caches and ephemeral VMDKs. At the vSphere Namespace level, you configure storage policies specifically for persistent volumes. For more information on storage requirements and concepts within vSphere Supervisor, refer to the documentation on Persistent Storage for Workloads.
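The split between Supervisor-level and namespace-level storage policies described above can be summarized as a simple lookup. This is only a restatement of the paragraph in code form; the dictionary keys and the `policy_scope` function are hypothetical names, not product identifiers.

```python
# Where the storage policy for each vSphere Pod disk type is configured,
# as described in the paragraph above.
STORAGE_POLICY_SCOPE = {
    "ephemeral": "supervisor",
    "container-image": "supervisor",
    "persistent-volume": "namespace",
}


def policy_scope(disk_type):
    """Return the level at which the policy for a disk type is set."""
    try:
        return STORAGE_POLICY_SCOPE[disk_type]
    except KeyError:
        raise ValueError(f"unknown vSphere Pod disk type: {disk_type!r}") from None


for disk_type in STORAGE_POLICY_SCOPE:
    print(f"{disk_type}: policy set at the {policy_scope(disk_type)} level")
```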

Regarding networking, both vSphere Pods and virtual machines within VKS clusters rely on the NSX-provided network topology. For more detailed information, see the section on Supervisor Networking.

Additionally, an essential component called Spherelet runs on each ESXi host. Spherelet is a native implementation of the Kubernetes kubelet, enabling the ESXi host itself to participate as a node within the Kubernetes cluster.

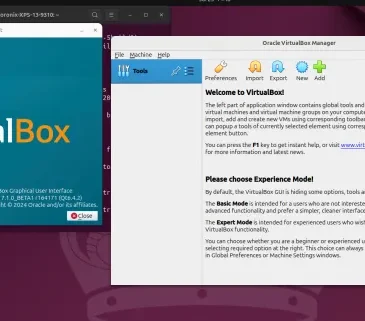

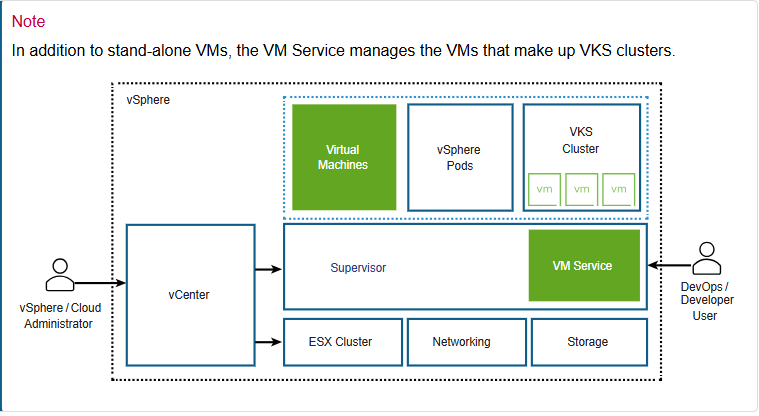

Using Virtual Machines in vSphere Supervisor

vSphere Supervisor offers a VM Service that enables DevOps engineers to deploy and run VMs declaratively, alongside containers, in a common, shared Kubernetes environment. Both containers and VMs share the same vSphere Namespace resources and can be managed through a single vSphere Supervisor interface.

The VM Service addresses the needs of DevOps teams that use Kubernetes, but have existing VM-based workloads that cannot be easily containerized. It also helps users reduce the overhead of managing a non-Kubernetes platform alongside a container platform. When running containers and VMs on a Kubernetes platform, DevOps teams can consolidate their workload footprint to just one platform.

Each VM deployed through the VM Service functions as a complete machine running all the components, including its own operating system, on top of the vSphere Supervisor infrastructure. The VM has access to the networking and storage that the Supervisor provides, and is managed using the standard Kubernetes kubectl command. The VM runs as a fully isolated system that is immune to interference from other VMs or workloads in the Kubernetes environment.
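As an illustration of what managing a VM declaratively can look like, the sketch below assembles a VM definition as a Python dictionary and prints it as JSON. The API group, kind, and field names (`className`, `imageName`, `storageClass`, `powerState`) are assumed to follow the `v1alpha1` schema of the open source VM Operator project, and newer API versions may differ. The VM class, image, storage class, and namespace values are placeholders.

```python
import json


def build_vm_manifest(name, namespace, vm_class, image_name, storage_class):
    """Return a VirtualMachine manifest as a plain dictionary.

    Assumption: field names follow the VM Operator v1alpha1 schema; verify
    them against the API version available in your environment.
    """
    return {
        "apiVersion": "vmoperator.vmware.com/v1alpha1",
        "kind": "VirtualMachine",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "className": vm_class,          # sizing (CPU and memory) of the VM
            "imageName": image_name,        # VM image to deploy
            "storageClass": storage_class,  # storage policy exposed to the namespace
            "powerState": "poweredOn",      # desired state, reconciled by the platform
        },
    }


# Every value below is a placeholder chosen for illustration.
vm = build_vm_manifest(
    name="legacy-app-vm",
    namespace="team-a",
    vm_class="example-vm-class",
    image_name="example-image",
    storage_class="example-storage-policy",
)
print(json.dumps(vm, indent=2))
```

The manifest states the desired end state, for example that the VM is powered on, rather than the steps to reach it. A file with this content could be submitted with `kubectl apply -f` in the same way as any other Kubernetes object.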

When to Use Virtual Machines on a Kubernetes Platform?

Generally, the decision to run a workload in a container or in a VM depends on your business needs and goals. Reasons to use VMs include the following:

- Your applications cannot be containerized.

- Applications are designed for a custom kernel or custom operating system.

- Applications are better suited to running in a VM.

- You want to have a consistent Kubernetes experience and avoid overhead. Rather than running separate sets of infrastructure for your non-Kubernetes and container platforms, you can consolidate these stacks and manage them with a familiar kubectl command.

- You run VMs that are deployed and managed by VCF Automation within the All Applications Organization setup.

Supervisor Services in vSphere Supervisor

Supervisor Services are vSphere-certified Kubernetes operators that deliver Infrastructure-as-a-Service components and tightly integrated Independent Software Vendor services to developers. You can install and manage Supervisor Services on the vSphere Supervisor environment to make them available for use with Kubernetes workloads. When Supervisor Services are installed on Supervisors, DevOps engineers can use the service APIs to create instances on Supervisors in their user namespaces. These instances can then be consumed in vSphere Pods and VKS clusters.