Introduction

Storage is one of the core components of any virtual infrastructure. In VMware vSphere, understanding how storage is provisioned, accessed, and managed by ESXi hosts is essential for performance, scalability, and availability.

Whether you’re working with local disks, SANs, NAS, or cloud-attached storage, the choice of storage technology directly affects the cost, complexity, flexibility, and reliability of your environment.

Whether you’re a system administrator deploying virtual machines, a cloud engineer architecting scalable infrastructure, or a virtualization enthusiast exploring best practices, this guide will help you understand and differentiate between the major VMware vSphere storage technologies: VMFS, NFS, vSAN, vVols, and RDM.

VMFS (Virtual Machine File System)

Let’s imagine you have a shared folder on your school or office network that many people can access at the same time. Everyone can read and write to that folder, and it works smoothly without people stepping on each other’s toes. That’s the basic idea behind VMFS, but for virtual machines.

VMFS, or Virtual Machine File System, is a clustered file system designed by VMware. It stores virtual machines and their files on block-based storage: Fibre Channel or iSCSI SAN LUNs, or local disks directly attached to the server.

Unlike general-purpose file systems such as NTFS or FAT32, VMFS is built to let multiple ESXi hosts access the same datastore at the same time. It uses on-disk locking so that a given VM’s files are opened by only one host at a time, which is essential in shared environments such as vSphere clusters.

Key Characteristics

| Feature | Explanation |
|---|---|
| Clustered Access | Multiple ESXi hosts can use the same VMFS datastore simultaneously without conflict. |
| Supports Snapshots | VM snapshots capture the state of a VM so you can roll back if needed. |
| Compatible with vMotion & Storage vMotion | You can migrate running VMs between hosts or move their files between datastores. |
| Versions | The latest version, VMFS-6, adds automatic space reclamation (UNMAP) and support for 4K native drives. VMFS-5 and VMFS-6 both support datastores up to 64 TB. |

Note: For new datastores, use VMFS-6 whenever possible; it reclaims unused space automatically and supports 4K drives.

Let’s say your organization has a Fibre Channel SAN (very fast shared storage) that multiple ESXi hosts connect to, and you want these hosts to run and manage VMs from that central location. Instead of copying VMs to every host, you format a LUN on that SAN with VMFS so that all hosts can see and run the same VMs.

This makes it ideal for:

- HA (High Availability) Clusters

- vMotion-enabled environments

- Shared storage scenarios

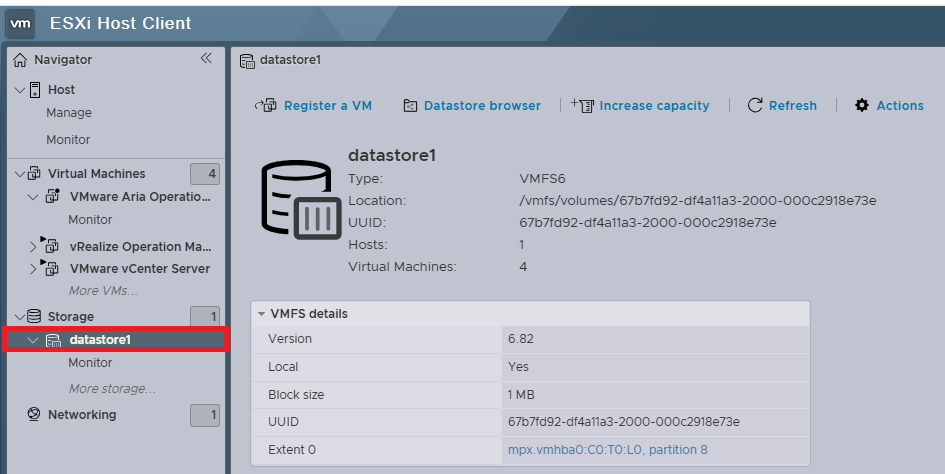

How to Create a VMFS Datastore

Let’s walk through how you’d create a VMFS datastore step-by-step using the vSphere Client:

Log in to the vSphere Client.

Open vCenter Server (or the ESXi Host Client for a standalone host) in your browser.

Navigate to Storage:

In the left pane, click Storage. You’ll see a list of available datastores.

Start the New Datastore wizard:

Choose VMFS as the type. You’ll be prompted to select a device (disk or LUN) to format.

If no unused disks or LUNs are available, the wizard has no device to offer; confirm that the LUN is presented to the host and rescan the storage adapters.

Choose the Disk and File System Version:

Select an unused disk or LUN, and pick VMFS-6 as the version (unless you have a specific reason to use VMFS-5).

Name and Format:

Give the datastore a name (e.g., Prod_VMFS_01). Click Finish, and the ESXi host will create the file system.
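For hosts managed from the command line, the same result can be sketched with `vmkfstools`. The Python helper below is hypothetical and only builds the argument list (it runs nothing). It assumes the target partition already exists, for example created beforehand with `partedUtil`, and the device ID `naa.600a0b80001234` is a made-up placeholder.

```python
import shlex


def vmkfstools_create_cmd(device_id: str, label: str,
                          partition: int = 1, vmfs_version: int = 6) -> list[str]:
    """Build (but do not run) a vmkfstools command that formats a partition with VMFS."""
    if vmfs_version not in (5, 6):
        raise ValueError("vSphere datastores use VMFS-5 or VMFS-6")
    if partition < 1:
        raise ValueError("partition numbers start at 1")
    # ESXi exposes partitions as /vmfs/devices/disks/<device>:<partition>
    device_path = f"/vmfs/devices/disks/{device_id}:{partition}"
    # -C creates a file system of the given type, -S sets the datastore label
    return ["vmkfstools", "-C", f"vmfs{vmfs_version}", "-S", label, device_path]


if __name__ == "__main__":
    # Placeholder device ID; real IDs are listed by `esxcli storage core device list`
    cmd = vmkfstools_create_cmd("naa.600a0b80001234", "Prod_VMFS_01")
    print(shlex.join(cmd))
```

Printing the command instead of executing it makes it easy to review before pasting it into an ESXi shell session.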

NFS (Network File System)

NFS is a file-level storage protocol that lets ESXi hosts use shared storage over TCP/IP. Instead of formatting a LUN with VMFS, each host mounts an export from a NAS server or array: the NAS owns the file system, and ESXi stores VM files on it. Because every host in a cluster can mount the same export, NFS datastores support shared-storage features such as vMotion and vSphere HA.

Key Characteristics:

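- Easy to set up and manage.

- Network-based storage: uses standard Ethernet and TCP/IP, so no Fibre Channel fabric is required.

- No storage multipathing configuration is needed on ESXi; path redundancy comes from the network design.

- NFS versions 3 and 4.1 are supported.

- High availability: an NFS datastore mounted on every host in a cluster serves as shared storage for vSphere HA and vMotion.

- Cross-platform compatibility: works with NAS servers and arrays from many vendors.

- Security features: NFS 4.1 supports Kerberos authentication.

- Snapshot and backup capabilities: many NAS arrays can snapshot and back up the exported volume independently of vSphere.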

Tutorial:

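Go to Storage > New Datastore and select NFS as the type.

Choose NFS 3 or NFS 4.1, then enter a datastore name, the NFS server address, and the exported folder path.

Select the ESXi hosts that should mount the volume and finish the wizard.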
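The same mount can be done from the ESXi shell with `esxcli storage nfs add` (NFS 3) or `esxcli storage nfs41 add` (NFS 4.1). The helper below is a hypothetical sketch that only assembles the command; the server addresses, export paths, and datastore names are placeholders. Mount a given export with the same NFS version on every host, because NFS 3 and NFS 4.1 use different locking mechanisms.

```python
import shlex


def nfs_mount_cmd(servers: list[str], share: str, volume_name: str,
                  version: str = "3") -> list[str]:
    """Build (but do not run) an esxcli command that mounts an NFS export as a datastore."""
    if not servers:
        raise ValueError("at least one NFS server address is required")
    if version == "3":
        if len(servers) != 1:
            raise ValueError("an NFS 3 mount uses a single server address")
        namespace = "nfs"
    elif version == "4.1":
        # NFS 4.1 accepts a comma-separated list of addresses for the same server
        namespace = "nfs41"
    else:
        raise ValueError("ESXi supports NFS 3 and NFS 4.1")
    # -H server address(es), -s exported path, -v datastore name shown in vSphere
    return ["esxcli", "storage", namespace, "add",
            "-H", ",".join(servers), "-s", share, "-v", volume_name]


if __name__ == "__main__":
    print(shlex.join(nfs_mount_cmd(["192.168.10.50"], "/exports/vmstore", "NFS_DS01")))
    print(shlex.join(nfs_mount_cmd(["192.168.10.50", "192.168.11.50"],
                                   "/exports/vmstore41", "NFS41_DS01", version="4.1")))
```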

When to Use NFS in VMware vSphere

NFS is often a preferred choice for small to medium-sized environments due to its low-cost setup and flexibility. If you’re building a vSphere Cluster (for example, using vSphere HA or vSphere DRS), NFS provides a simple and reliable shared storage solution. For many standard workloads, NFS offers sufficient performance, making it an excellent choice for virtual desktops, development environments, and test workloads.


VMware vSAN (Virtual SAN)

What is it?

vSAN aggregates local disks of ESXi hosts into a shared datastore using software-defined storage.

Key Characteristics:

- Integrated into the ESXi kernel.

- Supports policies for performance, availability, and space efficiency.

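- Requires a minimum of 3 hosts in a standard cluster (4 recommended for production, so vSAN can rebuild data after a host failure); 2-node clusters need an additional witness host.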
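The host minimum follows from the storage policy. With RAID-1 mirroring, tolerating n host failures (FTT=n) needs 2n+1 hosts, so FTT=1 needs 3; RAID-5 and RAID-6 erasure coding need 4 and 6 hosts. The sketch below encodes those rules for a standard vSAN OSA cluster and estimates raw capacity before slack space and metadata overhead. ESA, 2-node, and stretched clusters use different layouts, and the "minimum plus one" figure is a rule of thumb that leaves room to rebuild after a host failure.

```python
from typing import NamedTuple


class VsanSizing(NamedTuple):
    min_hosts: int
    recommended_hosts: int
    raw_capacity_gb: float


# (placement method, failures to tolerate) -> (minimum hosts, raw-capacity multiplier)
# Standard vSAN OSA cluster only; RAID-5/6 erasure coding requires all-flash.
_RULES = {
    ("RAID-1", 1): (3, 2.0),    # 2 replicas + witness
    ("RAID-1", 2): (5, 3.0),    # 3 replicas + witnesses
    ("RAID-1", 3): (7, 4.0),    # 4 replicas + witnesses
    ("RAID-5", 1): (4, 4 / 3),  # 3 data + 1 parity
    ("RAID-6", 2): (6, 1.5),    # 4 data + 2 parity
}


def vsan_sizing(ftt: int, method: str, usable_gb: float) -> VsanSizing:
    """Return host counts and raw capacity needed for a given usable capacity."""
    try:
        min_hosts, multiplier = _RULES[(method, ftt)]
    except KeyError:
        raise ValueError(f"unsupported policy: {method} with FTT={ftt}") from None
    # Rule of thumb: one spare host so components can be rebuilt after a host failure
    return VsanSizing(min_hosts, min_hosts + 1, usable_gb * multiplier)


if __name__ == "__main__":
    for method, ftt in [("RAID-1", 1), ("RAID-5", 1), ("RAID-6", 2)]:
        print(method, ftt, vsan_sizing(ftt, method, 1000))
```

For 1 TB of VM data under the default RAID-1, FTT=1 policy, this yields 3 hosts minimum, 4 recommended, and about 2 TB of raw capacity.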

Example Use Case:

Hyper-converged infrastructure with no traditional SAN/NAS.

Tutorial:

Enable vSAN under Cluster > Configure > vSAN > Services.

Choose between Single Site Cluster, 2-Node, or Stretched Cluster.

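Claim disks on each host: with the Original Storage Architecture (OSA), create disk groups of one cache device plus one or more capacity devices; with the vSAN 8 Express Storage Architecture (ESA), NVMe devices are claimed into a single storage pool instead.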

vVols (Virtual Volumes)

What is it?

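vVols provide VM-granular storage management. Instead of placing VM files in a shared LUN, vSphere works with the storage array through its VASA provider to create separate storage objects for each VM, and ESXi reaches those objects through protocol endpoints on the SAN or NAS.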

Key Characteristics:

- Fine-grained policy control over storage services.

- Each virtual disk is a separate vVol object on the array rather than a file inside a shared datastore.

- Reduces LUN management overhead.
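To make the per-disk granularity concrete, the sketch below lists the main vVol objects a single VM occupies: one config vVol (the .vmx file, logs, and disk descriptors), one data vVol per virtual disk, and a swap vVol created when the VM powers on. It is a simplified, hypothetical model; real arrays identify vVols by UUID, and snapshot and memory vVols are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VvolObject:
    kind: str  # "config", "data", or "swap"
    name: str  # illustrative label only; arrays track vVols by UUID


def vvol_objects(vm_name: str, disks: list[str], powered_on: bool) -> list[VvolObject]:
    """List the main vVol objects one VM occupies on the array (simplified)."""
    objects = [VvolObject("config", f"{vm_name}-config")]
    # Each virtual disk becomes its own data vVol, so the array can apply
    # storage services per disk instead of per LUN
    objects += [VvolObject("data", f"{vm_name}-{disk}") for disk in disks]
    if powered_on:
        objects.append(VvolObject("swap", f"{vm_name}-swap"))
    return objects


if __name__ == "__main__":
    for obj in vvol_objects("sql01", ["os", "data", "logs"], powered_on=True):
        print(obj.kind, obj.name)
```

On VMFS, the three disks of `sql01` would be files inside one shared LUN; in this model, the array sees five separate objects.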

Example Use Case:

Enterprises requiring per-VM storage policies across multiple tiers.

Tutorial:

- Register the VASA provider from your array vendor.

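- Create a storage container on the array, then create a vVol datastore in vSphere that maps to it.

- Create VM Storage Policies from the capabilities the array advertises and assign them to VMs on the vVol datastore.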

RDM (Raw Device Mapping)

What is it?

RDM allows a virtual machine to access a physical LUN directly, through a mapping file (.vmdk) stored on a VMFS datastore that points to the raw device.

Key Characteristics:

- Useful for clustering solutions such as Windows Server Failover Clustering (WSFC, formerly MSCS).

- Two modes: Virtual Compatibility Mode and Physical Compatibility Mode.

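- Data I/O goes to the raw LUN instead of a virtual disk file on VMFS; in Physical Compatibility Mode, nearly all SCSI commands are passed straight through to the device.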

Example Use Case:

Pass-through storage access for a VM running Microsoft SQL Server in a Windows Cluster.

Tutorial:

Right-click the VM > Edit Settings > Add New Device > RDM Disk.

Select the LUN and desired compatibility mode.
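From the ESXi shell, an RDM mapping file can also be created with `vmkfstools`: `-r` creates a Virtual Compatibility Mode mapping and `-z` a Physical Compatibility Mode (pass-through) mapping. The hypothetical helper below only builds that command; the device ID and datastore path are placeholders, and the resulting .vmdk would then be attached to the VM as an existing hard disk.

```python
import shlex

# vmkfstools flag for each RDM compatibility mode
_RDM_FLAGS = {
    "virtual": "-r",   # virtual compatibility: VM snapshots allowed, device virtualized
    "physical": "-z",  # physical compatibility: SCSI commands passed through to the LUN
}


def rdm_mapping_cmd(device_id: str, mapping_vmdk: str, mode: str) -> list[str]:
    """Build (but do not run) a vmkfstools command that creates an RDM mapping file."""
    if mode not in _RDM_FLAGS:
        raise ValueError("mode must be 'virtual' or 'physical'")
    if not mapping_vmdk.endswith(".vmdk"):
        raise ValueError("the mapping file must be a .vmdk on a VMFS datastore")
    return ["vmkfstools", _RDM_FLAGS[mode],
            f"/vmfs/devices/disks/{device_id}", mapping_vmdk]


if __name__ == "__main__":
    print(shlex.join(rdm_mapping_cmd(
        "naa.600a0b80005678",
        "/vmfs/volumes/Prod_VMFS_01/sql01/sql01_rdmp.vmdk",
        "physical")))
```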

Storage vMotion

What is it?

Storage vMotion enables live migration of a VM’s disk files between datastores without downtime.

Key Characteristics:

- Useful for balancing storage load.

- No service disruption to running VMs.

- Can be used via the vSphere Client or PowerCLI.

Example Use Case:

Migrating VMs from slower storage to an SSD-backed datastore.

Tutorial:

- Right-click VM > Migrate > Change Storage Only.

- Select the target datastore and begin migration.
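When Storage vMotion is used to balance load, the main decision is which datastore to move to. The sketch below is a hypothetical helper, not a vSphere API: it skips datastores that would drop below a free-space reserve after the move (20% here, an assumed threshold) and picks the one left with the largest fraction of free space, optionally restricted to SSD-backed datastores.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Datastore:
    name: str
    capacity_gb: float
    free_gb: float
    ssd: bool


def pick_target(datastores: list[Datastore], vm_size_gb: float,
                require_ssd: bool = True,
                min_free_ratio: float = 0.2) -> Optional[Datastore]:
    """Pick the datastore left with the largest free-space fraction after the move."""
    best, best_ratio = None, -1.0
    for ds in datastores:
        if require_ssd and not ds.ssd:
            continue
        free_after = ds.free_gb - vm_size_gb
        # Keep a free-space reserve (assumed 20% by default) on the target
        if free_after < ds.capacity_gb * min_free_ratio:
            continue
        ratio = free_after / ds.capacity_gb
        if ratio > best_ratio:
            best, best_ratio = ds, ratio
    return best


if __name__ == "__main__":
    inventory = [
        Datastore("SATA_01", 4000, 3000, ssd=False),
        Datastore("SSD_01", 2000, 900, ssd=True),
        Datastore("SSD_02", 2000, 1500, ssd=True),
    ]
    target = pick_target(inventory, vm_size_gb=200)
    print(target.name if target else "no suitable datastore")
```

Returning `None` rather than the "least bad" datastore keeps the helper from suggesting a migration that would simply move the capacity problem elsewhere.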

iSCSI & Fibre Channel

What is it?

These are block-based protocols for accessing centralized storage: iSCSI over a standard IP network and Fibre Channel over a dedicated fiber fabric.

Key Characteristics:

- iSCSI runs over TCP/IP (cheaper, flexible).

- Fibre Channel runs over a dedicated fabric (faster and more predictable, but more expensive).

- Requires proper initiator configuration on ESXi.

Tutorial (iSCSI):

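Go to Host > Configure > Storage Adapters > Add Software Adapter and add a software iSCSI adapter.

On the adapter’s Dynamic Discovery tab, add the target IP address (port 3260 by default).

Rescan the storage adapters, then create a VMFS datastore on the newly visible LUN.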
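The same steps map to `esxcli` commands in the ESXi shell. The helper below is a hypothetical sketch that only builds them; `vmhba65` and the target addresses are placeholders (run `esxcli iscsi adapter list` after enabling the software adapter to find its real name), and VMkernel port binding is not covered.

```python
import shlex


def iscsi_setup_cmds(adapter: str, targets: list[str], port: int = 3260) -> list[list[str]]:
    """Build (but do not run) esxcli commands for software iSCSI with dynamic discovery."""
    # Enable the software iSCSI adapter on the host
    cmds = [["esxcli", "iscsi", "software", "set", "--enabled=true"]]
    # Dynamic discovery: one SendTargets entry per target portal
    for ip in targets:
        cmds.append(["esxcli", "iscsi", "adapter", "discovery", "sendtarget", "add",
                     f"--adapter={adapter}", f"--address={ip}:{port}"])
    # Rescan so the newly discovered LUNs appear
    cmds.append(["esxcli", "storage", "core", "adapter", "rescan", f"--adapter={adapter}"])
    return cmds


if __name__ == "__main__":
    for cmd in iscsi_setup_cmds("vmhba65", ["192.168.20.10", "192.168.21.10"]):
        print(shlex.join(cmd))
```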

Storage Policies and Tags

vSphere allows admins to define storage policies to ensure VMs are provisioned on compatible storage with appropriate characteristics (like IOPS, availability, encryption).

Tutorial:

Go to Policies and Profiles > VM Storage Policies.

Define rules based on tags or capabilities.

Apply the policy during VM creation.
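Tag-based placement can be pictured as a filter over datastores. The sketch below is a simplified model, not the vSphere policy engine: it assumes each rule lists acceptable tags (a datastore must carry at least one of them) and that a datastore must satisfy every rule. The tag and datastore names are hypothetical.

```python
def compatible_datastores(datastore_tags: dict[str, set[str]],
                          rules: list[set[str]]) -> list[str]:
    """Return, sorted by name, the datastores that satisfy every rule."""
    return sorted(
        name
        for name, tags in datastore_tags.items()
        # A rule is satisfied when the datastore carries at least one of its tags
        if all(tags & rule for rule in rules)
    )


if __name__ == "__main__":
    tags = {
        "SSD_01": {"Tier-Gold", "Encrypted"},
        "SSD_02": {"Tier-Gold"},
        "SATA_01": {"Tier-Bronze", "Encrypted"},
    }
    gold_encrypted = [{"Tier-Gold", "Tier-Silver"}, {"Encrypted"}]
    print(compatible_datastores(tags, gold_encrypted))
```

A VM assigned this policy would only be offered `SSD_01` as a compatible datastore in this model.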

Extra Tip: Hardware Compatibility with VMware Storage

Always verify storage hardware (arrays, controllers, drivers) against VMware’s Hardware Compatibility List (HCL) before deployment. This avoids potential failures and ensures support for advanced features like vSAN or vVols.

Conclusion

VMware vSphere provides a robust ecosystem of storage technologies that cater to various performance, scalability, and operational needs. Whether you’re using VMFS, vSAN, vVols, or NFS, knowing when and how to use each option ensures a reliable and efficient virtual infrastructure.

Mastering vSphere storage options is a must-have skill for any IT professional working in virtualization. Start with lab environments to test each type and see how they impact your workload behavior and performance.
